When maximum security and business continuity are required, the backup process takes on strategic importance. To fulfil this role, the P5 server can be given a redundant failover instance.

Archiware P5 can be configured to run on two separate host computers, such that one is able to take over from the other in the event of a failure. This configuration requires specific setup steps, and some manual actions at the time of the failure itself, both of which are described below. Automation is possible using scripts, which will differ depending on the platform and setup being used. Only the manual steps are described in this article, but they can form the basis of your own scripts.

This article is relevant for P5 Archive, P5 Backup and P5 Synchronize. P5 Backup2Go cannot be used with failover due to the specific requirements of the storage repository.

Executive Summary

The key to understanding how a P5 installation can move (fail over) from one host to another is that a P5 server requires access to the config/ and log/ folders within its P5 software installation directory. These two folders contain everything unique to the P5 server installation. Only one P5 software installation may modify these folders at a time. Therefore, to fail over from one P5 host to another, these folders must be available to both, via shared storage or some replication workflow.

General Points

Important – under normal operation, P5 should be running only on the Primary P5 Server. When failover takes place, the P5 software can start up on the Cold-standby server. If P5 is running on both servers at the same time, the configuration and indexes will become damaged.
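The rule that P5 must never run on both servers at once can be guarded by a simple pre-start check on the Cold-standby server. The following is a minimal sketch, not an Archiware tool: it assumes the P5 web-admin interface answers on TCP port 8000 (adjust if your installation uses a different port), and the function names are hypothetical.

```python
import socket


def p5_is_listening(host: str, port: int = 8000, timeout: float = 3.0) -> bool:
    """Return True if something accepts TCP connections on host:port.

    Port 8000 is an assumed default for the P5 web-admin interface.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, unreachable or timed out: nothing answered on that port.
        return False


def safe_to_start_standby(primary_host: str, port: int = 8000) -> bool:
    """Allow the standby to start only when the primary no longer answers."""
    return not p5_is_listening(primary_host, port)
```

A failed connection only shows that the primary could not be reached from the standby. A network fault between the two hosts looks the same as a stopped P5 server, so a manual confirmation is still advisable before starting P5 on the standby.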

As far as is possible, try to keep the following elements between the Primary P5 Server and Cold-standby Server the same:

- Server hardware

- Connections to storage. E.g. PCI/FCAL/SAS/Thunderbolt

- Network interfaces

- Operating system and patch level

- Mount-points or drive mappings of local and network volumes and shares

- Installed P5 versions

- Location of the P5 installation directory

Generally, the closer the setup of these two servers, the fewer problems you should expect. The version of P5 on both servers should be kept the same: if upgrading the Primary P5 Server, upgrade the Cold-standby Server at the same time. After installing or upgrading, confirm that the software starts up successfully on the Cold-standby Server, and then shut P5 down again.
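One way to keep track of the items listed above is to record them for each server and compare the two records whenever either machine changes. The sketch below is illustrative only: the dictionary keys and all values are made-up placeholders, and how the values are collected is left to the platform in use.

```python
def profile_differences(primary: dict, standby: dict) -> dict:
    """Return {item: (primary_value, standby_value)} for every mismatch."""
    keys = sorted(set(primary) | set(standby))
    return {
        key: (primary.get(key), standby.get(key))
        for key in keys
        if primary.get(key) != standby.get(key)
    }


# Placeholder values for illustration; replace with data from your own hosts.
primary_profile = {
    "os_patch_level": "example-os-1.2",
    "p5_version": "example-version-A",
    "p5_install_dir": "/usr/local/aw",
    "mount_points": ("/mnt/p5_config", "/mnt/production_data"),
}
standby_profile = dict(primary_profile, p5_version="example-version-B")

differences = profile_differences(primary_profile, standby_profile)
```

Here `differences` contains a single entry for `p5_version`, which is the kind of drift that should be corrected before a failover is ever needed.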

Note: P5 should not be running on the Cold-standby server until the failover occurs.

Requirements for a cold standby solution

1. Connection to shared storage

Any shared storage attached via TCP/IP or Fibre Channel (FC) that P5 needs to interact with should be mounted consistently on both the Primary P5 Server and the Cold-Standby P5 Server. By ‘consistent’, we mean that the mount-point or other method of access should be the same on both of these machines.

This storage might be used in various different ways by P5. For example, it might contain:

- customer data that is being backed up, archived or replicated by P5 jobs.

- one or more ‘P5 Disk Library’, used as target storage for archive and backup jobs.

- P5’s configuration databases and log files, such that they’re available to both servers.

However this storage is attached, whether via a network file-sharing protocol or as a SAN volume over iSCSI or FC, both servers should be able to access it in the same way.

In addition to storage attached to the P5 server in this way, backup, archive and replication jobs are also able to access storage connected to P5 client machines. Nothing special needs to be done here to accommodate access to these clients when the failover takes place. The Cold-Standby P5 Server will access these P5 clients in the same way that the Primary P5 Server did, via the TCP/IP network.

2. Connection to destination storage

P5 Backup and P5 Archive will commonly write to LTO Tape, disk storage or cloud storage. Connection to this storage from the Primary P5 Server and the Cold-Standby P5 Server should be handled as follows.

LTO tape devices are connected to servers using either Fibre Channel (FC) or SAS interconnects. FC is a network (or fabric) in which one tape device can be connected to both the Primary P5 Server and the Cold-Standby P5 Server simultaneously. This allows failover to take place without having to physically disconnect the tape hardware from the first server and reconnect it to the second. In this scenario, however, P5 should not be running on the Cold-Standby P5 Server, so that only the Primary P5 Server controls the tape hardware until a failover takes place.

If the destination storage is disk, then this disk should be mounted on both the Primary P5 Server and the Cold-Standby P5 Server. This is straightforward when using standard network protocols to mount the storage.

If cloud storage is being used as the destination, then access to the cloud storage is defined within the P5 configuration. Provided both the Primary P5 Server and the Cold-Standby P5 Server have a connection to the cloud storage, it will work from both servers just the same.

3. P5 configuration and indexes – two different options

Both the Primary P5 Server and the Cold-Standby P5 Server should have the same version of P5 installed. The default installation directories are as follows:

- Windows – C:\Program Files\ARCHIWARE\Data_Lifecycle_Management_Suite

- Linux/macOS – /usr/local/aw

Within the above P5 installation directory, there are two important folders. The contents of these folders change on the Primary P5 Server each time a job runs or a change is made to the configuration.

- config/ – this folder contains the configuration database (P5’s settings) and the backup and archive indexes that track all files that have been backed up and archived, and the location of those files on the target storage.

- log/ – this folder contains the job history, available to browse in the P5 web-admin interface. A new job entry is created each time a P5 server performs some activity.

At the point of failover, the contents of these two folders need to be up to date on the Cold-Standby P5 Server, so that it can pick up where the failed P5 server left off.

As the contents of these folders change on the Primary P5 Server, the folders either need to be shared between both P5 servers, or a workflow needs to be created whereby the Cold-Standby P5 Server receives a copy of them from the Primary P5 Server on a regular basis. Let’s look in more detail at how this can be achieved.

3.1. Option 1 – Hosting P5 config on shared storage

In this setup, the Primary P5 Server and the Cold-Standby P5 Server use shared storage for the config/ and log/ folders, e.g. an NFSv4/SMB share or SAN volume. The P5 application software can be installed in the usual way on the system partition alongside the operating system, but these two folders within the P5 installation directory should be hosted on the shared storage. On Linux or macOS, this can be achieved using symbolic links. On Windows, junctions or symbolic links can be used. The shared storage should be provided by a host other than the Primary P5 Server and the Cold-Standby P5 Server. Because P5 writes and modifies database files within the config/ directory, the shared storage should perform well enough that access to these databases is not slowed down.
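The relocation of config/ and log/ onto shared storage can be scripted. The following is a minimal sketch for Linux or macOS, to be run while P5 is stopped. The function name and the `.local` suffix are hypothetical choices made for this example, and the paths passed in are whatever your installation and share use.

```python
import os
import shutil
from pathlib import Path


def link_to_shared(install_dir, shared_dir, names=("config", "log")):
    """Replace install_dir/<name> with a symlink to shared_dir/<name>.

    First run on the primary: the local folder is moved onto the share.
    Later run on the standby: the share already holds the live folder, so
    the standby's own folder is kept aside as <name>.local.
    """
    install, shared = Path(install_dir), Path(shared_dir)
    for name in names:
        local, target = install / name, shared / name
        if local.is_symlink():
            continue  # already linked, nothing to do
        if local.exists() and target.exists():
            local.rename(install / f"{name}.local")
        elif local.exists():
            shutil.move(str(local), str(target))
        else:
            target.mkdir(parents=True, exist_ok=True)
        os.symlink(target, local, target_is_directory=True)
```

For example, `link_to_shared("/usr/local/aw", "/mnt/p5_config")` would leave /usr/local/aw/config pointing at /mnt/p5_config/config. On Windows, creating symbolic links normally needs elevated rights, so a junction created with the operating system's own tools may be the simpler route there.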

3.2. Option 2 – Hosting P5 config on local storage – replicate P5 install

Both Primary P5 Server and the Cold-Standby P5 Server use their own local storage for configuration and indexes.

The configuration, indexes (config/) and logs (log/) folders are transferred periodically from the Primary P5 Server to the Cold-Standby P5 Server, on a scheduled basis, while no P5 jobs are running. This can be achieved with Archiware P5 Synchronize, for example. See Appendix A for more details of how this works.

4. General points

In order that only the live P5 installation is modifying files within these folders, the P5 installation on the Cold-Standby P5 Server should not be running until the failover needs to take place. At that point, where possible, the P5 software should be shut down on the Primary P5 Server before it is started up on the Cold-Standby P5 Server.

It may be that a clean shutdown of the P5 software on the Primary P5 Server is not possible. In this case, some databases may not have been closed if jobs were running at the point of failure. When the databases are opened again by the P5 installation on the Cold-Standby P5 Server, it will attempt to fix any database issues on startup.

Worked Examples

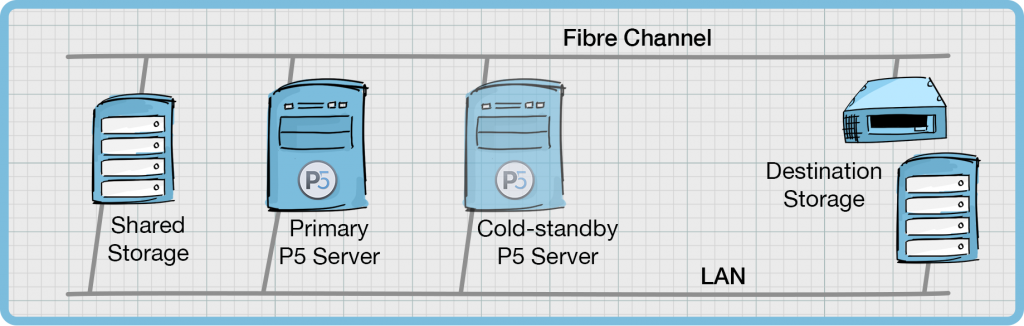

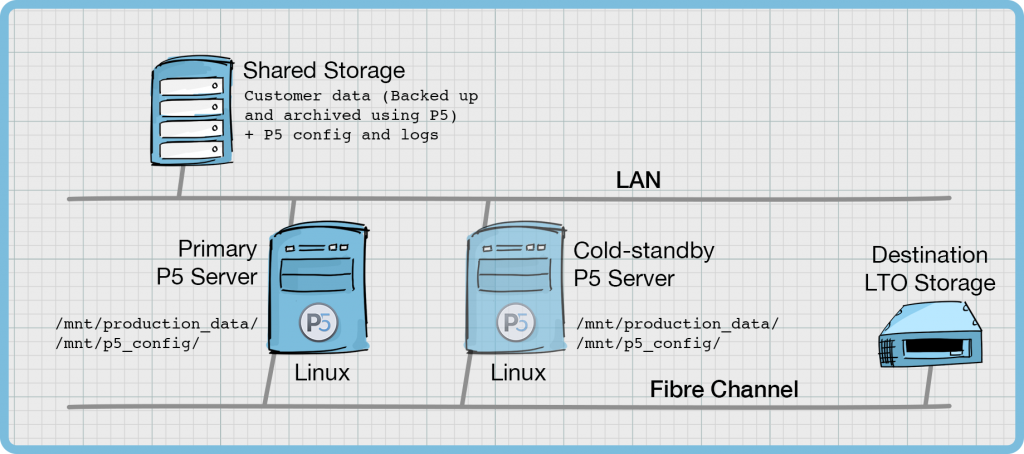

Worked Example #1 – Failover with Linux P5 servers, LTO tape library, P5 config/indexes residing on shared storage.

In this example, we will use two Linux hosts, both connecting to shared storage via NFS/SMB. From this shared storage, we have two shares mounted. ‘Production Data’ is the customer’s working storage, which is being backed up and archived with P5. The ‘P5 Config’ share is where the configuration and log directories are located. They are mounted at the following paths:

/mnt/production_data/

/mnt/p5_config/

P5 is installed on both the Primary P5 Server and the Cold-Standby P5 Server at the standard installation path /usr/local/aw. Symlinks have been created for config/ and log/ within this installation directory such that the contents are located on the shared storage as follows:

ls -al /usr/local/aw

total 416

drwxr-xr-x 18 root staff 576 7 Apr 16:42 .

drwxr-xr-x 19 root wheel 608 25 Mar 20:45 ..

-rw-r--r-- 1 root wheel 173478 6 Mar 09:08 ChangeLog

-rw-r--r-- 1 root wheel 2889 10 Jan 11:18 README

lrwxr-xr-x 1 root staff 22 3 Apr 14:36 bin -> binaries/Linux/unknown/64

drwxr-xr-x 3 root wheel 96 10 Jan 11:21 binaries

lrwxr-xr-x 1 root staff 31 7 Apr 16:42 config -> /mnt/p5_config/config

drwxr-xr-x 19 root wheel 608 12 Mar 15:56 etc

drwxr-xr-x 4 root wheel 128 12 Mar 15:56 lib

-rw-r--r-- 1 root wheel 1461 10 Jan 11:18 license.txt

lrwxr-xr-x 1 root staff 28 7 Apr 16:42 log -> /mnt/p5_config/log

drwxr-xr-x 3 root wheel 96 10 Jan 11:46 modules

-rwxr-xr-x 1 root wheel 1722 23 Jan 10:17 ping-server

drwxr-xr-x 3 root wheel 96 10 Jan 11:18 servers

-rwxr-xr-x 1 root wheel 15572 27 Jan 21:41 start-server

-rwxr-xr-x 1 root wheel 3228 10 Jan 11:19 stop-server

drwxrwxrwx 3 root staff 96 7 Apr 10:01 temp

-rwxr-xr-x 1 root wheel 3331 23 Jan 15:57 uninstall.sh

Note: When the P5 software starts up, the /mnt/p5_config/ directory must already be mounted, so that P5 is able to locate its configuration and log folders. If there is a delay in mounting the storage, the P5 startup scripts might need to be modified so that P5 startup is delayed as well.
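The requirement that the shared storage is mounted before P5 starts can be handled by a small wrapper that waits for the folders to appear. This is a sketch under assumptions: the function name, timeout and polling interval are arbitrary, and how the wrapper is hooked into the system startup depends on the platform.

```python
import os
import time


def wait_for_paths(paths, timeout=120.0, interval=2.0):
    """Poll until every path is an existing directory, or the timeout expires.

    Returns True as soon as all paths exist, False if the timeout is reached.
    """
    deadline = time.monotonic() + timeout
    while True:
        if all(os.path.isdir(p) for p in paths):
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Intended use (paths taken from this worked example):
#   ready = wait_for_paths(["/mnt/p5_config/config", "/mnt/p5_config/log"])
#   Start P5 only if ready is True, for instance by calling the start-server
#   script shown in the listing above.
```

Checking for the config and log folders themselves, rather than only the /mnt/p5_config mount-point, avoids starting P5 against an empty mount-point directory when the share is not yet attached.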

The LTO tape library is connected via Fibre Channel to both the Primary P5 Server and the Cold-Standby P5 Server. P5 is only active (running) on the Primary P5 Server, so the tape library is only being controlled by this single host.

At the point of failover, as the Primary P5 Server goes off-line, P5 should be manually started up on the Cold-Standby P5 Server. As it starts up, it will read the configuration from the shared storage. The path to the tape hardware will not be (or is very unlikely to be) consistent across both hosts. Therefore the tape hardware will need to be removed from the P5 configuration (via the web-admin interface) on the Cold-Standby P5 Server, and re-added. Since the tape hardware is already physically connected via Fibre Channel, it should be found by P5 immediately and this process will only take a couple of minutes.

Note: Because of similarities between P5 running on Linux and macOS, the above configuration is also valid for macOS hosts. Note that the paths for mounted storage on macOS are via the /Volumes/ directory.

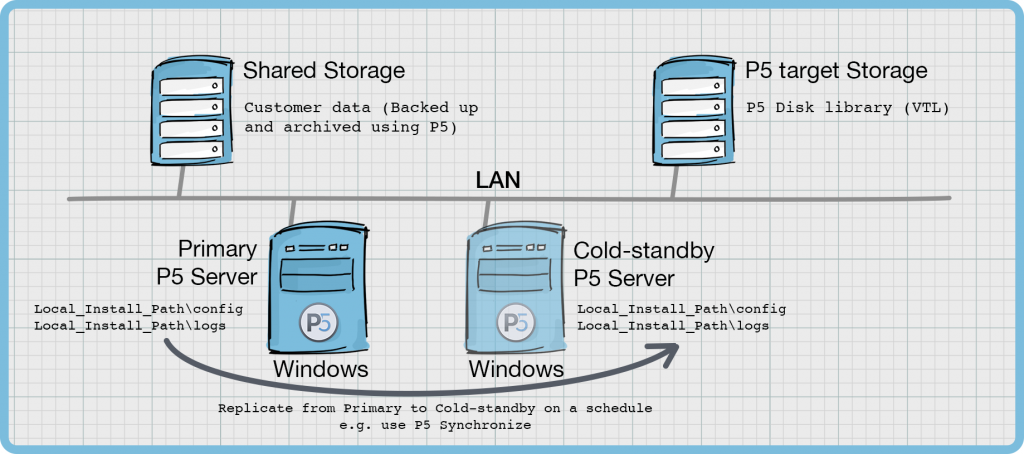

Worked Example #2 – Failover with Windows P5 servers, P5 config/indexes stored locally on C: drive, target backup/archive storage on disk

In this example, we will use two Windows hosts, connected to two separate storage devices over the IP network. The first storage device hosts the customer’s data; it is this storage that is backed up and archived using P5. The second is used as the target storage for P5 jobs, where a P5 Disk Library (or VTL) is configured.

Both Windows hosts can maintain permanent connections to both storage devices. The connections should use consistent mount points across both servers, whether drive letters or UNC paths are used.

Since P5 is installed in the standard installation directory on the respective C: partitions of the Primary P5 Server and the Cold-Standby P5 Server, the following two folders must be replicated from the Primary P5 Server to the Cold-Standby P5 Server:

Local_Install_Path\config

Local_Install_Path\log

Note that the ‘Local_Install_Path’ on a standard Windows installation is:

C:\Program Files\ARCHIWARE\Data_Lifecycle_Management_Suite

Therefore some external tool should be used to keep these two folders updated. One recommendation is to use Archiware’s P5 Synchronize product, which can replicate these folders each day on a schedule chosen so that the replication task does not run while any P5 jobs are running. See Appendix A for further detail on using P5 Synchronize here.

Note that P5 jobs modify the config/ and log/ folders while they run, so replication of these folders should be avoided while P5 jobs are running.

Appendix A – Using Archiware P5 Synchronize to replicate config and logs between installations

Using P5 Synchronize for the replication requires that P5 is running on both the Primary P5 Server and the Cold-Standby P5 Server, so some extra steps are required to make this possible.

The P5 Synchronize product should be activated (licensed) on the Primary P5 Server, and a Sync configuration set up to copy the config/ and log/ directories from the localhost machine to the remote client.

The remote client will be the Cold-Standby P5 Server, where P5 is running but no configuration needs to be made. A default installation is performed and the product is left running, listening for connections over TCP/IP.

When the config/ and log/ directories are copied over by the P5 Sync job, they must not overwrite the config/ and log/ directories of the running installation. They should therefore be copied into a separate folder on the target P5 machine. For example:

source: /usr/local/aw/config/ destination: /usr/local/aw/coldspare/config/

source: /usr/local/aw/log/ destination: /usr/local/aw/coldspare/log/

The destination Cold-Standby machine will now have both its ‘live’ running config/ and log/ folders and the copy from the Primary P5 Server, stored in the ‘coldspare’ folder.

In the event of a failover to the Cold-Standby machine, a script should be executed that stops the P5 software on this host, moves the running config/ and log/ directories elsewhere, and copies these two folders from the ‘coldspare’ directory back into /usr/local/aw/ before starting P5 up on this host.
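The folder swap performed by such a failover script could look roughly like the sketch below. It covers only the move-and-copy step and assumes P5 has already been stopped on this host. The function name and the name of the set-aside folder are hypothetical, and the folder names default to config and log as shown in the installation directory listing (adjust them if your installation differs).

```python
import shutil
import time
from pathlib import Path


def activate_coldspare(install_dir, names=("config", "log"), spare="coldspare"):
    """Swap the replicated folders into place. P5 must be stopped first.

    Returns the directory holding the set-aside default folders.
    """
    install = Path(install_dir)
    spare_dir = install / spare
    # Refuse to touch anything unless a replicated copy of every folder exists.
    missing = [n for n in names if not (spare_dir / n).is_dir()]
    if missing:
        raise FileNotFoundError("no replicated copy of: " + ", ".join(missing))
    aside = install / ("standby-default-" + time.strftime("%Y%m%d-%H%M%S"))
    aside.mkdir()
    for name in names:
        live = install / name
        if live.exists():
            shutil.move(str(live), str(aside / name))  # keep the old folders
        shutil.copytree(spare_dir / name, live)  # copy, leaving coldspare intact
    return aside
```

A complete script would call the stop-server script before `activate_coldspare("/usr/local/aw")` and the start-server script afterwards. Copying rather than moving the coldspare folders keeps the last replicated state available if the swap has to be repeated.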

Appendix B – Known issues

If the P5 installation includes an Archive index (database) that’s stored outside of the standard directory structure (/config/index/), the location of this index should be taken care of via the shared storage or replication techniques detailed in the article.